The Conflicting Messages Behind “Are You OK?”

Post the word “suicide” on Facebook and watch what happens. It happened to me last night. Not that I am suicidal; I just used the word in a comment on a post.

Meta’s digital guardian angel immediately swoops in: “It looks like you’re going through something difficult. Here are resources that may help.”

Hotlines, chat support, crisis links. For a brief moment, you could almost believe the platform is your concerned wingman checking on you after a bad night.

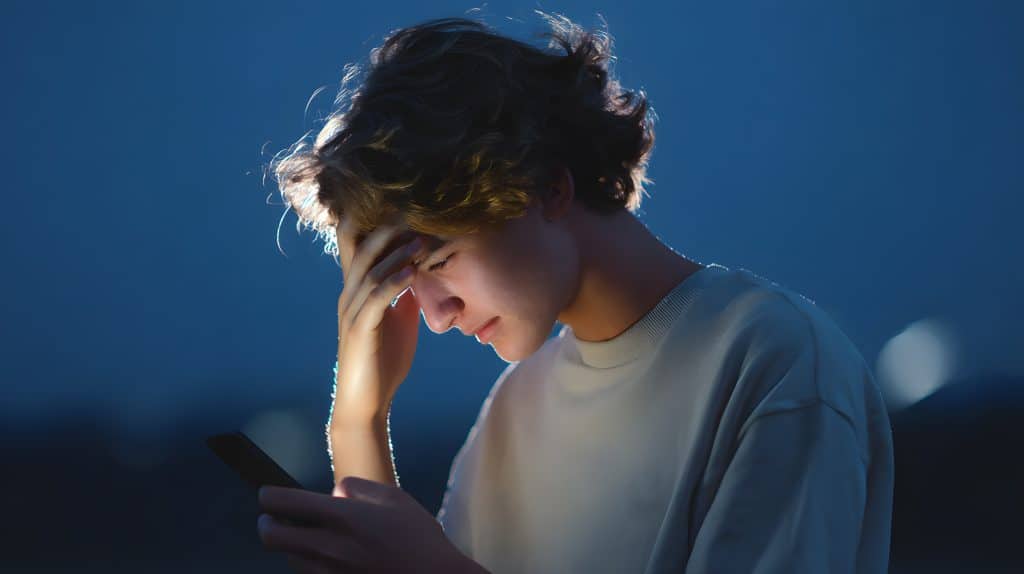

Then you swipe your thumb. And the very same platform that just asked if you’re okay throws you back into an algorithmic blast furnace of political rage bait, war footage, trauma-dump reels, mass-shooting updates, and influencer-manufactured despair.

It’s like your toxic ex telling you they’ve changed… and then keying your car five minutes later.

This raises the obvious question:

Does Facebook/Meta actually care about you?

Or does it just care about being able to say it cares about you?

Short answer: Meta cares about your behavior, not your well-being.

Long answer: it’s complicated, ugly, and every bit as contradictory as you’ve suspected.

The “We Care About You” Side

To be fair, Meta has built legitimate suicide-prevention tools:

• AI scans posts, comments, and livestreams for signs of self-harm.

• High-risk signals get routed for human review or automatically trigger resource prompts.

• Friends can report concerning posts and trigger wellness checks.

• Meta publicly touts these tools anytime Congress or a news crew starts sniffing around.

This is the glossy brochure version of Facebook — the one they break out when lawmakers or attorneys general start asking uncomfortable questions.

And to be clear, these tools have helped real people. Some users have been connected to crisis support because someone saw a concerning post and Facebook escalated it. That part is real.

But here’s the part Meta hopes you never put next to it in the same paragraph:

These safety tools exist inside the belly of an algorithm designed to exploit the exact emotional states they claim to protect you from.

The Feed That Doesn’t Care At All

Facebook’s algorithm doesn’t prioritize your mental health. It prioritizes your engagement.

Platforms like Facebook are built on a single, unwavering principle:

Your attention is the product.

And nothing — absolutely nothing — holds attention like negative emotional content.

That’s why doomscrolling is so common. The feed is rewarded every time it makes you anxious, angry, hopeless, or suspicious. You stay longer. You click more. You scroll deeper.

And the algorithm learns:

“Oh, you lingered on that post about someone’s mental breakdown? Here’s 500 more.”

“Oh, you read something about suicide? Clearly you like emotionally intense content. Let me help you spiral more efficiently.”

“Oh, you paused on a trauma-dump reel? Strap in — we’ve got a whole genre of that.”

The ugly truth is simple:

Your mental vulnerability is profitable.

Your despair has high engagement.

Your hopelessness has a strong retention curve.

Your emotional pain is extremely monetizable.

And that’s the conflict at the heart of Meta’s entire ecosystem.

When Corporate Liability Meets Algorithmic Addiction

Meta has two competing missions:

Mission 1: Look Like the Responsible Adult in the Room

This is where the suicide-prevention pop-ups come from.

It’s also where crisis-resource partnerships and public blog posts come from.

This mission is driven by:

• Fear of lawsuits

• Fear of regulation

• Fear of turning into the next congressional punching bag

• A desire to protect the brand

When you type “suicide” and Facebook gently asks if you’re okay, that’s this mission talking.

Mission 2: Keep You Scrolling No Matter What

This is the real Meta — the one that prints money.

It’s cold, efficient, and borderline predatory.

That mission is driven by:

• Ad revenue

• Retention metrics

• Engagement targets

• Growth imperatives

This mission does not care if the thing keeping you scrolling is healthy or emotionally catastrophic.

These two missions constantly crash into each other.

That’s why you see a suicide-prevention message one moment…

and 30 seconds later you’re watching a reel of someone explaining why humanity is doomed.

Meta built a smoke detector inside a building made of gasoline and scented candles.

And it acts surprised that the alarm keeps going off.

When Vulnerability Becomes a Recommendation Signal

Here’s where it gets darker.

Experiments, watchdog investigations, academic studies, and legal filings have revealed a pattern that should disturb anyone:

Once an account engages with self-harm or depressive content, social media algorithms begin recommending more of it. Often aggressively.

Not because they want you to hurt yourself.

Because you paused to read it.

Because you scrolled slowly.

Because you watched for four seconds instead of two.

To a machine built for one purpose, that behavior says:

“This content keeps the human here. Show them more.”

This is not care.

This is not support.

This is not compassion.

This is exploitation of emotional vulnerability disguised as personalization.

And it is absolutely intentional design — even if no individual engineer set out to cause harm.
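To make the mechanism concrete, here is a toy sketch of a dwell-time signal feeding a ranker. It is not Meta's code, and nothing here is drawn from Meta's actual system: the baseline of two seconds, the learning rate, the topic labels, and the function names are all assumptions made up for illustration. The only point is that a ranker which scores by time spent has no idea why you paused.

```python
from collections import defaultdict

# Hypothetical constants, chosen only for illustration.
BASELINE_SECONDS = 2.0  # dwell time treated as "no particular interest"
LEARNING_RATE = 0.5     # how strongly one view shifts a topic's weight


def update_interest(weights, topic, dwell_seconds):
    """Shift a topic's weight by how far the dwell time is from the baseline."""
    weights[topic] += LEARNING_RATE * (dwell_seconds - BASELINE_SECONDS)
    return weights


def rank_feed(weights, candidates):
    """Order candidate posts by the learned weight of their topic, highest first."""
    return sorted(candidates, key=lambda post: weights[post["topic"]], reverse=True)


weights = defaultdict(float)
update_interest(weights, "despair", 4.0)    # lingered for four seconds
update_interest(weights, "gardening", 2.0)  # scrolled past at the baseline

candidates = [
    {"id": 1, "topic": "gardening"},
    {"id": 2, "topic": "despair"},
    {"id": 3, "topic": "sports"},
]
ranked = rank_feed(weights, candidates)
print([post["topic"] for post in ranked])
```

In this sketch, two extra seconds on one post are enough to put that topic at the top of the next feed. The model never asks whether the pause meant interest, worry, or pain.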

The Filter Bubble Becomes a Psychological Bubble

A decade ago, experts warned about algorithmic “filter bubbles” that shaped your worldview.

Today, you get something worse:

Mood bubbles.

If you’re happy, you get more happy content.

If you’re angry, you get more outrage.

If you’re hopeless, you get more despair.

If you’re vulnerable, you get a feedback loop of vulnerability.

Combine this with posting the word “suicide,” and you get a bizarre digital contradiction:

Meta pops up to ask if you’re okay…

then hands you a personalized playlist of the emotional equivalent of a deployment sandstorm filled with broken glass.
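The loop behind a mood bubble can be sketched as a small simulation. This is a simplified model under stated assumptions, not a description of any real recommender: the three mood labels, the dwell times, the feed size, and the update rule (a mood's weight grows by the total seconds spent on it) are all hypothetical.

```python
def simulate_mood_bubble(dwell_by_mood, rounds=10, feed_size=100):
    """Return the share of the feed given to each mood at the start of each round."""
    # Start with an even split across moods.
    weights = {mood: 1.0 for mood in dwell_by_mood}
    history = []
    for _ in range(rounds):
        total = sum(weights.values())
        shares = {mood: w / total for mood, w in weights.items()}
        history.append(shares)
        # Each mood's weight grows with the total seconds spent on it:
        # (posts shown) x (seconds per post).
        for mood in weights:
            posts_shown = shares[mood] * feed_size
            weights[mood] += posts_shown * dwell_by_mood[mood]
    return history


# Hypothetical user who lingers twice as long on hopeless posts.
dwell = {"happy": 2.0, "angry": 2.0, "hopeless": 4.0}
history = simulate_mood_bubble(dwell)
first, last = history[0]["hopeless"], history[-1]["hopeless"]
print(f"hopeless share: {first:.2f} -> {last:.2f}")
```

The feed starts out evenly split, and the share given to the mood the user lingers on rises every round. Nobody in this model chose despair. The loop simply compounds whatever held attention longest.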

So Does Meta Care About You?

Meta cares about:

• Liability

• Public relations

• Avoiding regulation

• Avoiding lawsuits

• Brand image

• Revenue

• Market share

Meta does not fundamentally care whether its feed makes your mental health better or worse — because if your pain keeps you on the app, the system is already doing what it was designed to do.

Meta cares just enough to help you survive the app.

It does not care enough to make the app healthy.

When you type “suicide,” the platform shows you concern.

When you keep scrolling, it shows you the truth.

If You’re Ever the One Typing That Word

Take the resources.

Call the hotline.

Talk to an actual human.

But do not mistake Facebook’s automated concern for actual, ongoing safety.

The suicide-prevention pop-up is not your buddy.

It’s a corporate fire extinguisher in a building designed to burn slowly forever.

Closing the app and calling a real person will always do more for you than scrolling one more time.

TLDR Version: Limit your time on social media to 30 minutes a day, talk to people you know, read a book, or take up a hobby. You have been warned.

References

• Meta/Facebook AI Suicide Prevention Announcement, 2018 – About Facebook Newsroom.

• Business Insider report on Facebook’s suicide-risk scoring system, 2018.

• O’Dea et al., “The Role of AI in Suicide Prevention” – Philosophy & Technology, Springer.

• Elhai et al., “Doomscrolling and Mental Health” – International Journal of Environmental Research and Public Health.

• Harvard Health Publishing, “Doomscrolling: Why We Do It and How to Stop.”

• Haugen, F. – The Facebook Papers / whistleblower disclosures.

• Legal filings alleging Meta buried internal mental-health harm studies (2025 court disclosures).

• Molly Russell Inquest Findings – UK Coroner’s Report and coverage by BBC, Guardian, etc.

• Molly Rose Foundation / Center for Countering Digital Hate joint research reports on harmful recommendation loops (2024–2025).

• Panoptykon Foundation, “Facebook Won’t Let You Forget Your Anxiety” experiment.

• Guardian Australia investigation into blank-account algorithm tests (2024).

• U.S. lawsuits alleging algorithm-driven harm to minors, nationwide multidistrict filings (2023–2025).

• Wired Magazine reporting on recommender systems and self-harm content promotion.

• Eli Pariser, “The Filter Bubble,” 2011.

_____________________________

Dave Chamberlin served 38 years in the USAF and Air National Guard as an aircraft crew chief, retiring as a CMSgt. He has held a wide variety of technical, instructor, consultant, and leadership positions in his more than 40 years of civilian and military aviation experience. Dave holds an FAA Airframe and Powerplant license, as well as a Master’s degree in Aeronautical Science. He currently runs his own consulting and training company and has written for numerous trade publications.

His true passion is exploring and writing about issues facing the military, and in particular, aircraft maintenance personnel.

As the Voice of the Veteran Community, The Havok Journal seeks to publish a variety of perspectives on a number of sensitive subjects. Unless specifically noted otherwise, nothing we publish is an official point of view of The Havok Journal or any part of the U.S. government.


© 2026 The Havok Journal
